Introduction

You may have noticed some changes to this site. Along with some style and color changes, I’ve updated the domain, and focused the pages on my technical blog. Originally this site started as an administrative page for the Minecraft servers I am hosting. I built the first Minecraft server in Google Cloud on a general Compute Engine instance, and was running this web page on a separate smaller instance. As the player count increased, I had to increase the resources on the VM to keep performance stable, but this quickly used up the free credits. I did a blog post about the cost and the steps I took to optimize things. Eventually I moved the Minecraft servers to my homelab, where I had more compute resources available that wouldn’t incur a monthly cost. I did a detailed post about this as well.

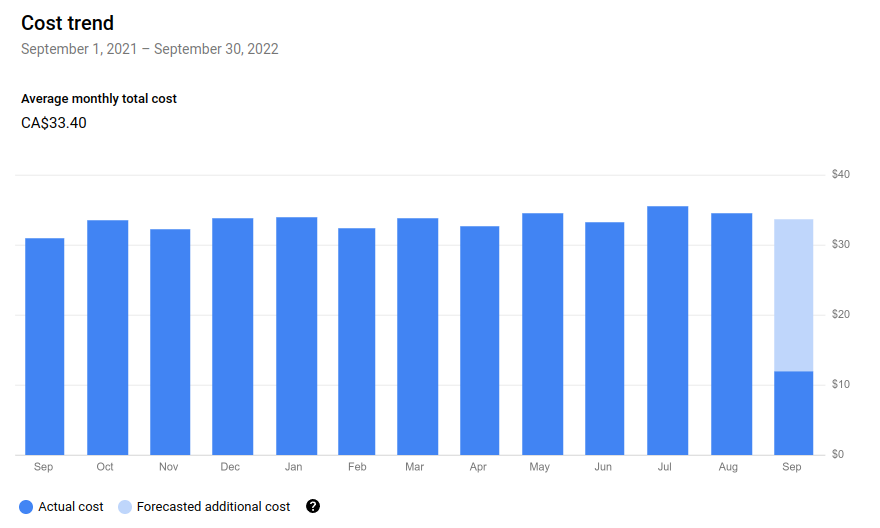

This website, as well as another personal site, continued to run in GCP; however, I was paying around $35 CAD a month to host everything in the cloud.

I’ve been focusing more on Amazon Web Services instead of Google Cloud Platform for my cloud experience recently, and last month was selected to join the AWS Community Builders program. One of the perks of the program is some additional free AWS credits, so I decided to take the opportunity to move this site to AWS to take advantage of using those up, as I seem to have an excess of them at this time. I’m sure most of the AWS credits will go towards experimentation and learning, but this is a consistent cost that they can be put towards as well.

GCP WordPress Instance

This site was built on an e2-micro Compute Engine instance, which has very limited resources. Normally this isn’t an issue as the CPU is burstable, but large OS updates do take quite a while to finish.

Google Cloud provides one free e2-micro instance per month in their Free Tier, but with this and another website running, I exceeded the free tier.

I built the website server on the following LEMP stack:

- Linux – Debian 10 “Buster”

- NGINX lightweight web server

- MySQL (MariaDB)

- PHP 7.3 (later upgraded to 8.1)

I decided to use NGINX instead of Apache for the webserver, as it had a lower footprint, which made it more suitable for running on an e2-micro instance. I followed multiple guides to install WordPress on the server. This guide from DigitalOcean is probably the closest that I used.

AWS WordPress Instance

Deciding how to set up the AWS WordPress instance took quite a bit of thought. I had considered a more complex setup including an EC2 (Elastic Compute Cloud) instance, a VPC (Virtual Private Cloud), and managed services like RDS (Relational Database Service) for the database and S3 (Simple Storage Service) for file storage. Another, more challenging approach I had read about was to set up WordPress on EKS (Elastic Kubernetes Service). I could also automate a lot of the setup using Terraform.

While these all sounded like excellent learning opportunities for the future, I decided against going this route, as I wanted a boring, stable, uncomplicated web server to host my articles. I also had to keep in mind that I wanted to be able to migrate the data from one WordPress instance to the new one, and the more complex the architecture, the more potential compatibility issues or points of failure there would be.

I ended up going with the AWS Lightsail platform for a number of reasons:

- There is a Bitnami AWS Lightsail WordPress image which is a very similar stack to the one I manually set up. I had used it for another website, and it was fairly straightforward, running on Debian 11 “Bullseye”, which I prefer. It uses Apache, MariaDB, and PHP 8.0, so it is more up to date.

- Using this pre-built setup would save time, as all of the versions would be tested before release, and should remain stable, rather than me having to build my own stack from scratch.

- This would make the migration from my old WordPress instance easier, and would allow me to upgrade later on with new system images.

- If this migration plan worked, I could use the same steps to migrate other sites too.

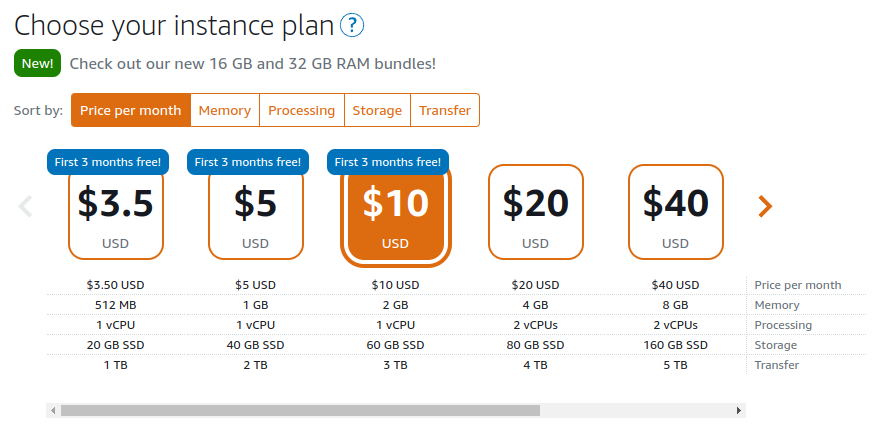

- AWS Lightsail has functionality for a static IP address, automatic daily snapshots, and a low cost of about $10/month for a decently sized instance.

- There is the option to increase the virtual server resources, and even upgrade to EC2 in the future if necessary.

- Lightsail has additional features such as CDN (Content Delivery Network) Distribution similar to Amazon CloudFront, and the option for Load Balancers or a managed database if needed. For the low traffic of my site, this will probably not be necessary for a long time.

Based on my requirements for simplicity and cost, AWS Lightsail seems like a good choice for hosting my WordPress sites.

Exporting the WordPress Database

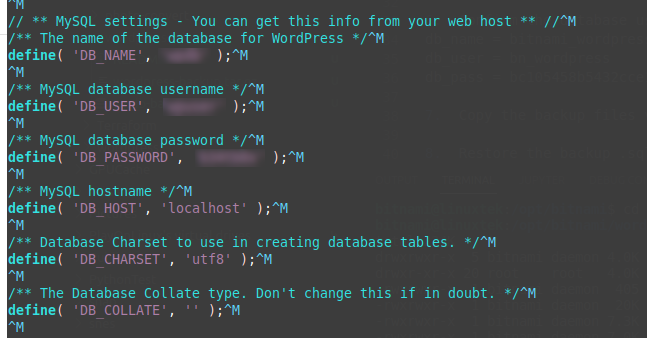

The first step of the migration was to export the database, which was running locally in the GCP Compute Instance on MariaDB. To refresh my memory on the database details, I checked the wp-config.php file located in /var/www/html/wordpress, which had the following sections with the database name, username, and password:
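The values in question are plain define() lines, so they can also be pulled out with a small helper instead of reading the file by eye. This is a minimal sketch, assuming the usual single-line define( 'KEY', 'value' ); layout; the function name wp_config_value is hypothetical and not part of WordPress.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of one define() constant in wp-config.php.
# Assumes the usual single-line form: define( 'DB_NAME', 'some_value' );
wp_config_value() {
    local config_file=$1 key=$2
    sed -n "s/^[[:space:]]*define([[:space:]]*['\"]${key}['\"][[:space:]]*,[[:space:]]*['\"]\(.*\)['\"][[:space:]]*);.*/\1/p" "$config_file"
}

# Example usage (path taken from the old server in this article):
# wp_config_value /var/www/html/wordpress/wp-config.php DB_NAME
# wp_config_value /var/www/html/wordpress/wp-config.php DB_USER
```

Lines that span several rows or carry trailing comments containing quotes would need a more careful parser than this.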

To back up the database, I used the following command to dump it to a file using the values from the wp-config.php file. When run, it prompted for the DB_PASSWORD value. You can pass the password as an argument on the command line, but that isn’t considered secure.
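If the dump ever needs to run unattended, one alternative to a command-line password is a client option file that only its owner can read. The sketch below is an assumption about how that could look, not what I ran: the function name and the .wp-dump.cnf file name are hypothetical, while --defaults-extra-file is a standard MySQL/MariaDB client option that has to come first on the command line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a MySQL client option file readable only by its owner.
# Passwords containing double quotes or backslashes would need extra escaping.
write_mysql_option_file() {
    local option_file=$1 db_user=$2 db_password=$3
    (
        umask 077                 # a newly created file gets mode 600
        rm -f "$option_file"      # make sure the file is created fresh
        printf '[client]\nuser=%s\npassword="%s"\n' "$db_user" "$db_password" > "$option_file"
    )
}

# Example usage (DB_USER and DB_NAME are the wp-config.php values):
# read -r -s -p 'DB password: ' db_password
# write_mysql_option_file "$HOME/.wp-dump.cnf" DB_USER "$db_password"
# mysqldump --defaults-extra-file="$HOME/.wp-dump.cnf" DB_NAME > /home/user/wordpress-backup.sql
# rm -f "$HOME/.wp-dump.cnf"
```

For a one-off migration like this one, the interactive prompt is simpler: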

mysqldump -u DB_USER -p DB_NAME > /home/user/wordpress-backup.sql

Backing Up the WordPress Directory

The only folder we needed to be concerned about on the old server was the /var/www/html/wordpress directory. The web server itself would be changed from NGINX to Apache, and the Bitnami image would have everything configured already. This directory includes all of the configuration, settings, posts, media uploads, content, etc from the older server.

In the /home/username directory, I ran the following command to back up the folder to a tar.gz archive file:

tar -czvf wordpress-backup.tar.gz /var/www/html/wordpress

The switches used perform the following:

-z : Compress archive using gzip program in Linux or Unix

-c : Create archive on Linux

-v : Verbose i.e display progress while creating archive

-f : Archive file name

Creating WordPress Server on AWS Lightsail

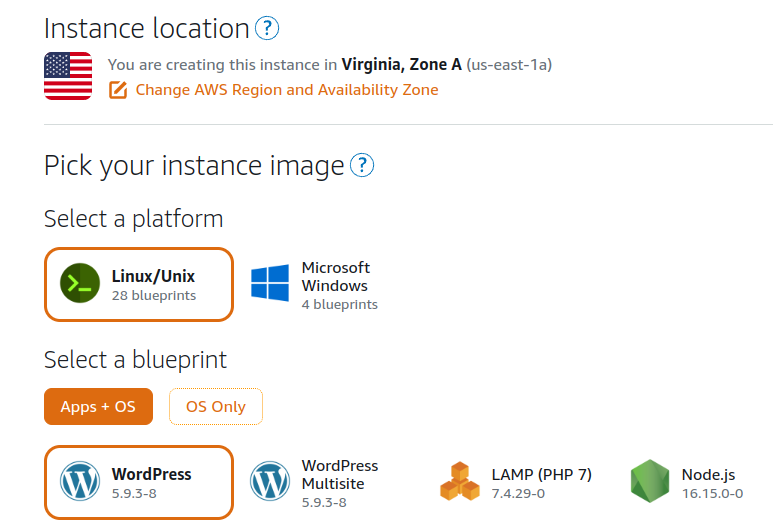

From the AWS Console, search for “Lightsail” or navigate directly to lightsail.aws.amazon.com. Although this is clickops, the work won’t be repeated often and the resources aren’t temporary, so I created the instance using the web interface. I clicked “Create Instance” and followed the menus to choose the platform, and WordPress as the blueprint:

I changed the SSH keypair by deleting the default id_rsa, and uploaded my own public SSH key, so that I could access the server without using a password.

I enabled Automatic Snapshots to occur daily, and chose an off-peak time for them to run.

I chose the instance plan that seemed like the best value for the usage I expected. This could always be modified or scaled up later on.

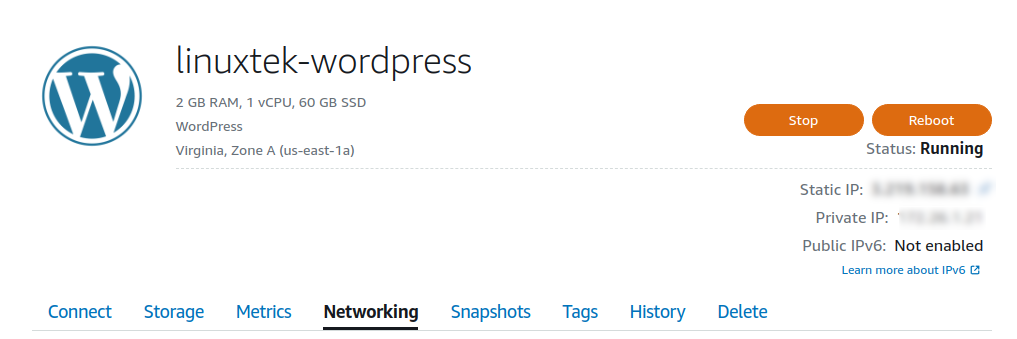

I named the instance “linuxtek-wordpress” and created the instance. Once the instance was created, I clicked Networking, created a Static IP, and attached it to the newly created instance. This would ensure that I could use the IP reliably without it changing.

Under Networking, I also adjusted the IPv4 firewall to restrict SSH access to my public IP only, so nobody else could attempt to connect. I also disabled IPv6 networking, as it didn’t seem necessary. By default, ports 80 and 443 are open to allow web traffic, so no changes were needed here.

Once the Lightsail instance was set up, I was able to SSH to the server using the username bitnami.

I adjusted the timezone and hostname using the following commands:

sudo timedatectl set-timezone America/Toronto

sudo hostnamectl set-hostname linuxtek.ca

I then used SCP to copy over the database backup and wordpress folder archive to the new server.
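Since the archive can be fairly large, it may be worth confirming that both files arrived intact. The following is a minimal sketch of that check using sha256sum; the write_checksums function, the backups.sha256 file name, and NEW_SERVER_IP are hypothetical placeholders, and the backup file names are the ones created earlier in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: record SHA-256 checksums for the files about to be copied.
write_checksums() {
    local checksum_file=$1
    shift
    sha256sum "$@" > "$checksum_file"
}

# Example usage on the old server, from the directory holding the backups:
# write_checksums backups.sha256 wordpress-backup.sql wordpress-backup.tar.gz
# scp backups.sha256 wordpress-backup.sql wordpress-backup.tar.gz bitnami@NEW_SERVER_IP:/home/bitnami/
#
# Then on the new server:
# cd /home/bitnami && sha256sum -c backups.sha256
```

sha256sum -c exits with a non-zero status if any file differs from its recorded checksum, so a corrupted transfer is caught before the restore starts.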

Restoring WordPress Data to the New Server

Because the new AWS server was created via a template, I didn’t know the generated usernames or passwords that had been set up. Fortunately, Bitnami puts a number of files in the /home/bitnami directory that are helpful. This documentation can also be helpful. I was able to get the database name, username, and password from the /opt/bitnami/wordpress/wp-config.php file as on the previous server.

For compatibility, I didn’t want to adjust any of the database settings, so I restored my backup from the dump file right on top of the existing database using this command (entering the database password when prompted):

mysql --user bn_wordpress --database bitnami_wordpress --password < wpdb-backup.sql

I then stopped the Bitnami stack, which controls all of the WordPress services. I needed them running to be able to manipulate the database, but for file level changes, I wanted them stopped, so I ran the following:

sudo systemctl stop bitnami

Note: There is also a control script at /opt/bitnami/ctlscript.sh which can be used to restart individual services, or stop/start all services, but I found it easier to use systemctl.

Once the services were stopped, I deleted the /opt/bitnami/wordpress folder, then recreated it and extracted the tar.gz archive into it. I used the --strip-components flag so the archive would extract the contents of the backed up /var/www/html/wordpress folder without including the full file path:
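The effect of --strip-components can be checked in a scratch directory before touching the real install. This is a throwaway sketch: every path below lives under a temporary directory and only mimics the layout of the real archive, where tar stores the path as var/www/html/wordpress (four components) after dropping the leading slash.

```shell
#!/usr/bin/env bash
# Scratch-directory demo: all names and paths here are throwaway.
demo=$(mktemp -d)
mkdir -p "$demo/src/var/www/html/wordpress/wp-content"
echo '<?php // placeholder' > "$demo/src/var/www/html/wordpress/wp-config.php"

# Build an archive with the same relative layout as the real backup
tar -czf "$demo/wordpress-backup.tar.gz" -C "$demo/src" var/www/html/wordpress

# Extract while dropping the four leading components: var/www/html/wordpress
mkdir "$demo/restore"
tar -xzf "$demo/wordpress-backup.tar.gz" -C "$demo/restore" --strip-components=4

# wp-config.php and wp-content now sit directly under restore/
ls "$demo/restore"
```

The commands run on the server itself follow.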

sudo rm -rf /opt/bitnami/wordpress

mkdir /opt/bitnami/wordpress

tar xvfz /home/bitnami/wordpress-backup.tar.gz -C /opt/bitnami/wordpress --strip-components=4

Once everything was extracted, I recursively adjusted the permissions and ownership of the files and folders to work with the Bitnami stack. The Bitnami documentation explains this a bit, as files and directories are owned by the user bitnami, and group daemon. There are a number of requirements for the files and directory permissions, which can be set using the commands provided below:

sudo chown -R bitnami:daemon /opt/bitnami/wordpress

sudo find /opt/bitnami/wordpress -type d -exec chmod 775 {} \;

sudo find /opt/bitnami/wordpress -type f -exec chmod 664 {} \;

sudo chmod 640 /opt/bitnami/wordpress/wp-config.php

sudo systemctl start bitnami

Updating Domain Name in WordPress

Because I was switching from using a subdomain to the full domain, I needed to update all references from minecraft.linuxtek.ca to just linuxtek.ca. This would ensure that all links in all published articles would work, and all images and other references would be consistent.

I found two ways to do this without using a plugin or script:

WP-CLI has an option that can do a search and replace with the following command:

sudo wp search-replace 'http://olddomain.com' 'http://newdomain.com' --precise --recurse-objects --all-tables

For me this was:

sudo wp search-replace 'https://minecraft.linuxtek.ca' 'https://linuxtek.ca' --precise --recurse-objects --all-tables

The other way was to directly edit the database tables (which was more reliable). This article (Method #2) goes through the steps to access the MySQL CLI to run the commands. I again took the db_name, db_user, and db_pass values from the wp-config.php file to log in:

mysql -u db_user -p database_name

Once logged in to the mysql> prompt, I ran the following commands:

UPDATE wp_options SET option_value = replace(option_value, 'http://olddomain.com', 'http://newdomain.com') WHERE option_name = 'home' OR option_name = 'siteurl';

UPDATE wp_posts SET guid = replace(guid, 'http://olddomain.com', 'http://newdomain.com');

UPDATE wp_posts SET post_content = replace(post_content, 'http://olddomain.com', 'http://newdomain.com');

UPDATE wp_postmeta SET meta_value = replace(meta_value,'http://olddomain.com', 'http://newdomain.com');

\q;

After each of the commands, it would return the number of rows matched and changed. I ran a few queries to make sure that the values were correct, then again restarted the bitnami stack with sudo systemctl restart bitnami.
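Another way to double-check the result is to take a fresh dump after the replacement and count how many references to the old domain remain. This is a minimal sketch under that assumption; the count_domain_refs function and the /tmp/after-replace.sql path are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count occurrences of a fixed string in a text file.
count_domain_refs() {
    local file=$1 domain=$2
    # -o prints every match on its own line, -F treats the domain as a literal string
    grep -oF -- "$domain" "$file" | wc -l | tr -d '[:space:]'
}

# Example usage after the replacement:
# mysqldump -u db_user -p database_name > /tmp/after-replace.sql
# count_domain_refs /tmp/after-replace.sql 'minecraft.linuxtek.ca'
```

A count of 0 means no references to the old subdomain are left in any table included in the dump.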

Updating Domain Names in DNS Provider

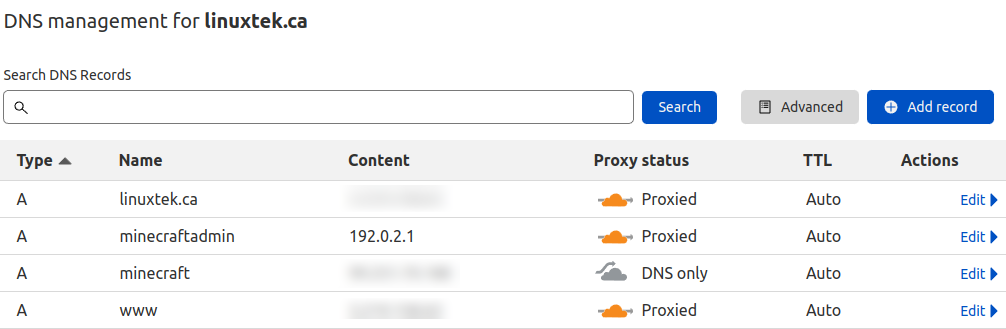

I use Cloudflare for some of my DNS services, which also provides some caching and DDoS protection. If you use Amazon Route 53 or another DNS provider, the domain names and IPs need to be updated there so they resolve properly.

Under my domain DNS settings, I updated the following:

- The www A record now resolves to the static IP of the new Lightsail server. It is proxied through Cloudflare for DDoS protection.

- The linuxtek.ca A record now resolves to the static IP of the new Lightsail server. It is proxied through Cloudflare for DDoS protection.

- I updated the minecraftadmin subdomain A record to point to a non-routable IP, 192.0.2.1, as I wanted it to redirect to the main linuxtek.ca domain (more on that below).

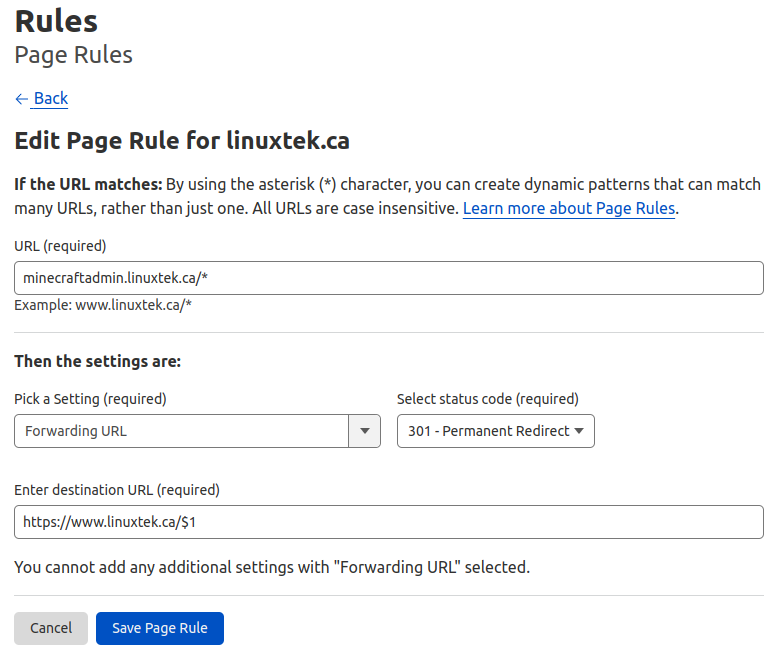

To ensure that any references to the old subdomain redirect to the new one, I set up a Page Rule to redirect the traffic with an HTTP 301 response. This tutorial video explains the details, but I’ve included the steps below. Note that I left the A record in place but pointed it at a dummy IP, so that the traffic is still proxied by Cloudflare, which is required for the Page Rule to take effect.

The $1 is used as a variable, so the URL path value from the second asterisk will be added to the redirected URL, so any full URL from the original domain should redirect now to the new one. I used the dummy IP 192.0.2.1 which is recommended by Cloudflare.

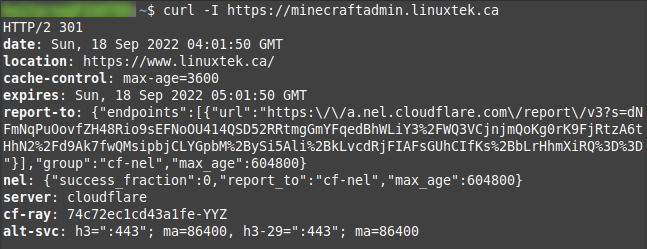

Once the DNS records and Page Rule had refreshed and were active (I had to flush my Pi-hole DNS cache), I was able to test this with the curl command, and see the HTTP 301 redirect from minecraftadmin.linuxtek.ca to linuxtek.ca:
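A check along these lines can also be scripted. The sketch below is an illustration rather than my exact command: redirect_summary is a hypothetical helper that reads the response headers printed by curl -sI and reports the status code together with the Location header, and /some/path is a made-up test path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read HTTP response headers on stdin and print
# "<status> <location>" for the first response seen.
redirect_summary() {
    awk '
        { sub(/\r$/, "") }                                   # headers end in CRLF
        /^HTTP\// && status == "" { status = $2 }            # e.g. "HTTP/1.1 301 ..."
        tolower($1) == "location:" && location == "" { location = $2 }
        END { print status, location }
    '
}

# Example usage (-s hides the progress meter, -I requests headers only):
# curl -sI http://minecraftadmin.linuxtek.ca/some/path | redirect_summary
```

If the Page Rule is working, the summary should show a 301 status followed by the matching URL on the new domain.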

Another tip that helped was testing DNS resolution using the DNS server 1.1.1.1, which can have its cache purged at this link. More information is available in this blog post.

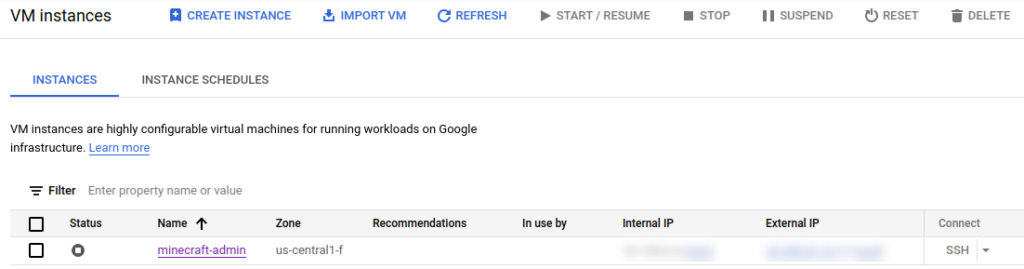

Once I could confirm the web traffic was redirecting properly to the AWS instance, I shut down the GCP Compute Engine instance:

Additional Troubleshooting and Debugging

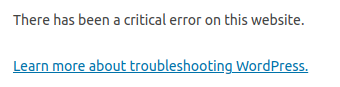

I did run into a few errors while trying to bring up the server after migration. On the initial load up, I ran into this error:

This message wasn’t very helpful, so I changed the debugging flag in wp-config.php from false to true:

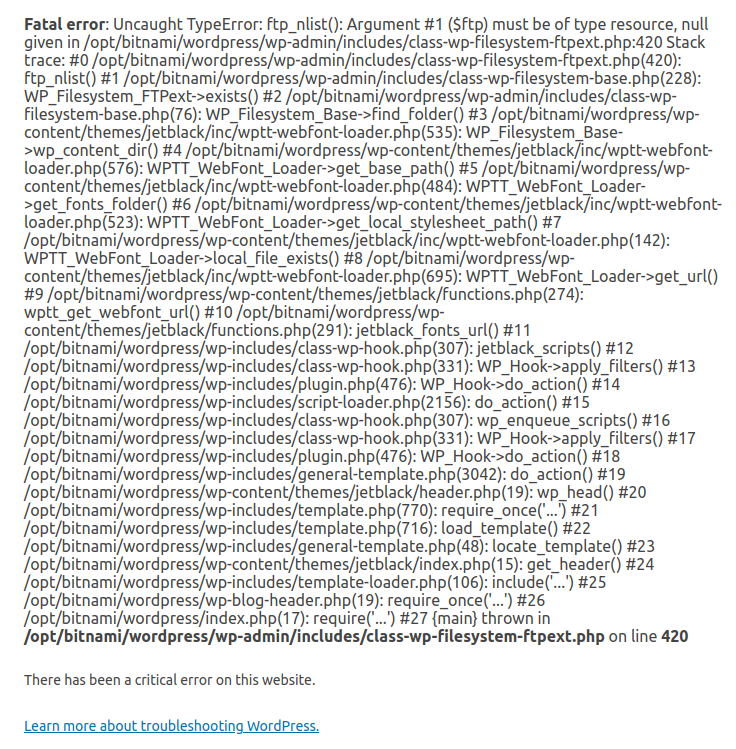

define( 'WP_DEBUG', true);

After restarting services, and refreshing, I got this error:

After researching the error, to resolve this, I had to add this line at the bottom of the wp-config.php file, and restart services:

define('FS_METHOD', 'direct');

Don’t forget to set WP_DEBUG back to false as well, as it shows debug info on multiple pages that you wouldn’t expect otherwise, even if the server is working.
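Flipping the flag back and forth by hand is easy to forget, so a small helper can make it a one-liner. This is a minimal sketch, assuming WP_DEBUG is set on a single define() line as shown above; set_wp_debug is a hypothetical function name, and the original file is kept with a .bak suffix.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set WP_DEBUG to true or false in a wp-config.php file.
set_wp_debug() {
    local config_file=$1 value=$2
    case "$value" in
        true|false) ;;
        *) echo "value must be true or false" >&2; return 1 ;;
    esac
    # Only the WP_DEBUG line is rewritten; WP_DEBUG_LOG and similar are left alone.
    sed -i.bak "s/^\([[:space:]]*define([[:space:]]*['\"]WP_DEBUG['\"][[:space:]]*,[[:space:]]*\)[a-zA-Z]*\([[:space:]]*);\)/\1${value}\2/" "$config_file"
}

# Example usage on the Bitnami image (wp-config.php there is not world-writable,
# so this likely needs to run with sufficient permissions):
# set_wp_debug /opt/bitnami/wordpress/wp-config.php false
# sudo systemctl restart bitnami
```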

Also pro tip – don’t attempt to write a blog post on your site as you take it up and down to get screenshots – you’ll probably lose some of your drafts!

Conclusion

With a bit of manual manipulation, it is possible to migrate a WordPress server between hosts without too much difficulty. I will likely add some backup scripts to automate backing up the /opt/bitnami/wordpress folder and the WordPress database, and save them to an S3 bucket. Although I have automated snapshots, this would be an extra layer of protection in case of a failure, and could easily be used to upgrade the server to a new image when one becomes available.
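As a rough idea of what such a backup script might look like, here is a minimal sketch. Nothing in it is implemented on the server yet: backup_wordpress_dir, the /home/bitnami/backups directory, and the BUCKET name are hypothetical, and the upload step assumes the AWS CLI is installed and has credentials for the bucket.

```shell
#!/usr/bin/env bash
# Hypothetical helper: archive a WordPress folder into a timestamped tar.gz
# and print the path of the archive that was created.
backup_wordpress_dir() {
    local source_dir=$1 dest_dir=$2 stamp archive
    stamp=$(date +%Y%m%d-%H%M%S)
    archive="$dest_dir/wordpress-$stamp.tar.gz"
    # -C stores paths relative to the parent folder, e.g. wordpress/wp-config.php
    tar -czf "$archive" -C "$(dirname "$source_dir")" "$(basename "$source_dir")"
    echo "$archive"
}

# Example usage (DB_USER, DB_NAME and BUCKET are placeholders):
# archive=$(backup_wordpress_dir /opt/bitnami/wordpress /home/bitnami/backups)
# mysqldump -u DB_USER -p DB_NAME > /home/bitnami/backups/wordpress-db.sql
# aws s3 cp "$archive" "s3://BUCKET/wordpress/"
# aws s3 cp /home/bitnami/backups/wordpress-db.sql "s3://BUCKET/wordpress/"
```

Because the archive stores a relative wordpress/ path, restoring it would only need --strip-components=1 rather than the 4 used during the migration.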

Hopefully this article helps some of you in doing similar tasks!
