TL;DR

If you’re running Ubuntu Desktop 22.04 as a VM on vSphere 7.0.3 (or possibly another hypervisor) and upgrade from kernel 5.15 to 6.5, you may find that graphics no longer work after a reboot: you just get a black screen after the Ubuntu boot logo. Don’t panic! Your install is not corrupted, and there are multiple ways to fix this below.

Introduction

Recently I started teaching part time at a local college. This semester, I’m teaching a class on Linux Server. A couple of students had mentioned corrupted installs where their Linux VMs failed to boot. After a hard reboot, the VM would start to boot into Ubuntu, show the logo, flash some of the disk check output, then go to a black screen. Unfortunately, we didn’t have a lot of time to troubleshoot during classes, and I didn’t get a chance to dig into the problem until tonight, when I was able to get access to one of the VMs in this state.

I had mostly moved away from supporting VMware software over the last few years. I mainly work with cloud systems these days, and I migrated my homelab to Proxmox early last year after the Broadcom acquisition was announced. Considering much of the recent news, this was a prescient decision. I had also moved away from doing technical support to focus on cloud engineering and DevOps. Alas…

Diagnosing the Issue

Initially I thought this might be a resource constraint issue: running out of disk or RAM, or a corrupted disk causing the boot failure. Many crashes could be due to allocating more memory to VMs than is available to the user. I tried a few times to get into the GRUB bootloader menu to reach rescue/emergency mode, but none of the common key commands were working (more on this later).

So instead, I attached an Ubuntu 22.04 Desktop Live CD to the VM, booted into that, then mounted the Ubuntu install disk to poke at the logs. Once I was in the Live CD environment, I opened a terminal, and ran lsblk to determine which disk was the main install. I ran sudo mount /dev/sda3 /mnt to mount the disk, and then went into /var/log to have a look at dmesg and the kernel log file kern.log.
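The log check described above can be sketched as a small shell helper. This is only an illustration: the function name `vmwgfx_messages` is hypothetical, and the default path assumes the installed system's root filesystem is mounted at /mnt, as in the mount command above.

```shell
# Hypothetical helper: print vmwgfx-related lines from a kernel log file.
# Assumption: the installed system's root is mounted at /mnt, so the
# default log path is /mnt/var/log/kern.log.
vmwgfx_messages() {
    log_file="${1:-/mnt/var/log/kern.log}"
    # -i: case-insensitive; -E: extended regex matching either the
    # driver name or a "Command buffer error" message
    grep -i -E 'vmwgfx|command buffer error' "$log_file"
}
```

Running `vmwgfx_messages` with no arguments reads the default path. Passing a different file, for example a rotated log such as /mnt/var/log/kern.log.1, searches that one instead.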

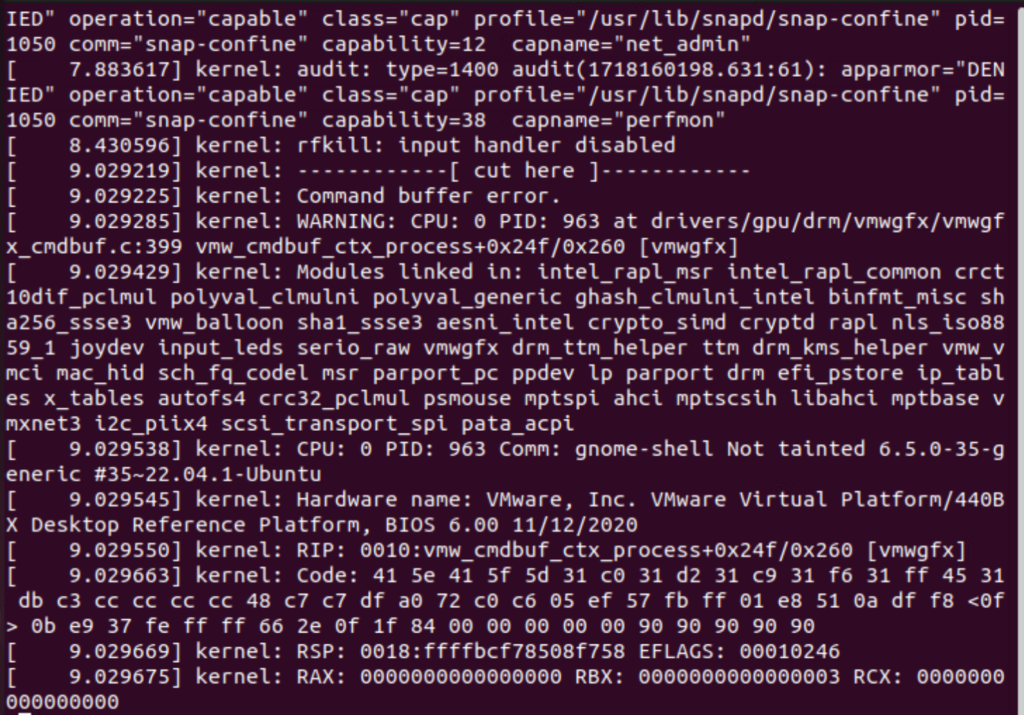

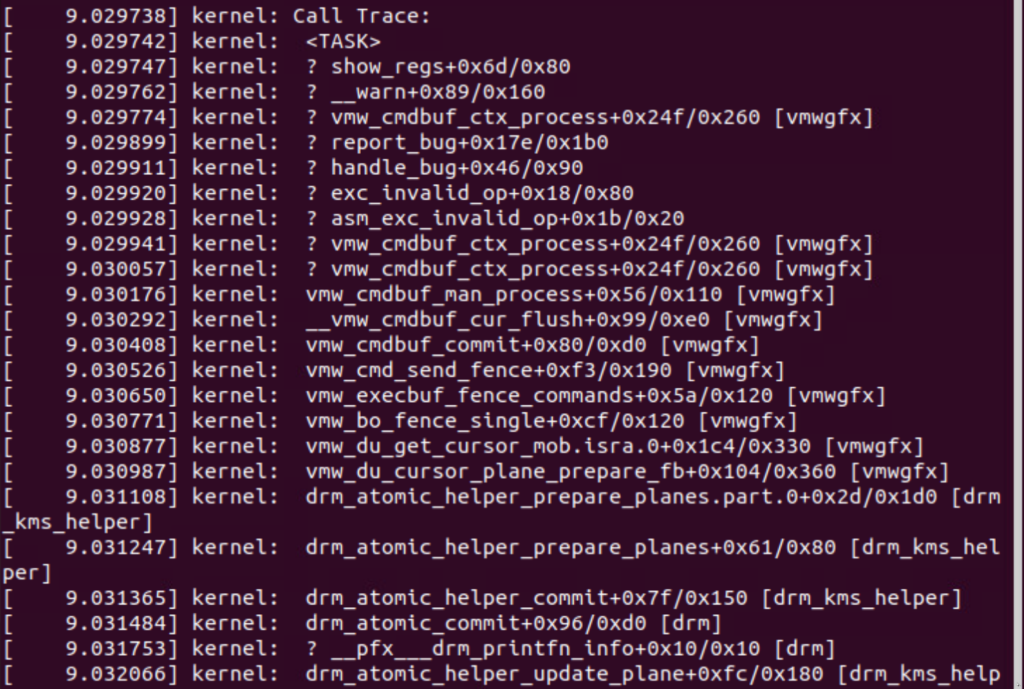

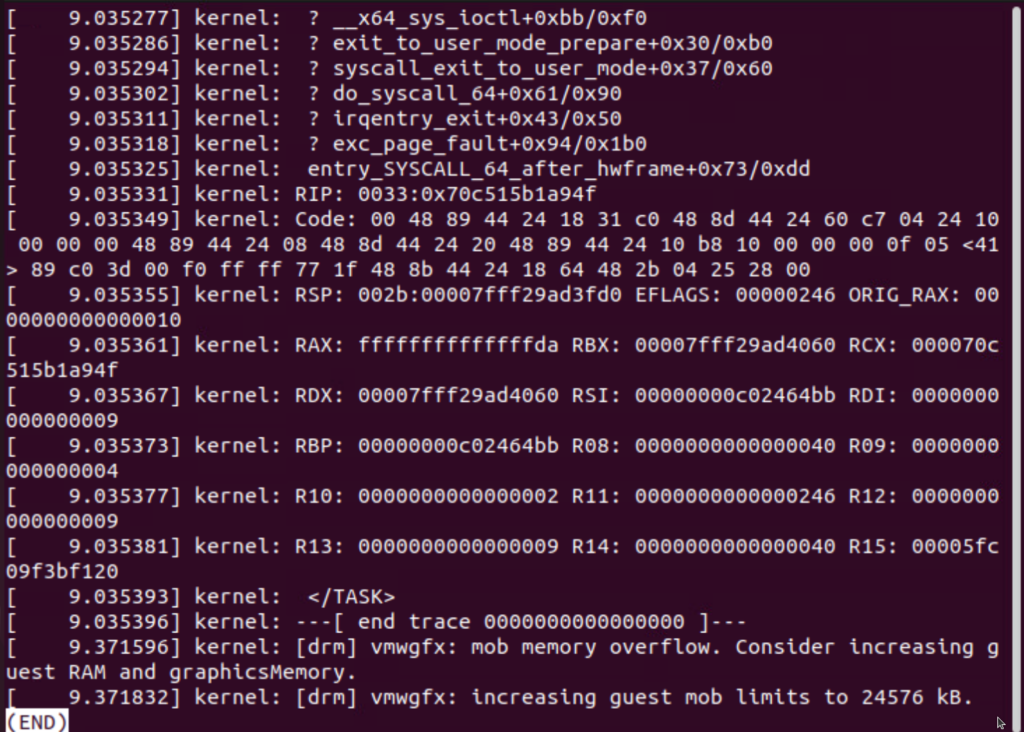

The dmesg output looked fairly normal, but I could see some errors and stack traces in the kernel log. No kernel panic though:

The top screenshot shows a warning for vmwgfx, and the bottom screenshot describes the problem:

kernel: Command buffer error.

kernel: WARNING: CPU: 0 PID: 963 at drivers/gpu/drm/vmwgfx/vmwgfx_cmdbuf.c:399 vmw_cmdbuf_ctx_process...

kernel: [drm] vmwgfx: mob memory overflow. Consider increasing guest RAM and graphicsMemory.

kernel: [drm] vmwgfx: increasing guest mob limits to 24576 kB.

A quick search on the error found this kernel patch note, which helped me understand why the last two lines were being printed. This thread on the VirtualBox forums gave me some ideas to try.
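To see how far the driver raised its limit, the value can be pulled out of the "increasing guest mob limits" message. The following is a minimal sketch, assuming the message format matches the log line quoted above; the function name `mob_limit_kb` is hypothetical.

```shell
# Hypothetical helper: read kernel log text on stdin and print the value
# (in kB) from each "increasing guest mob limits to N kB." line.
# Lines that do not match are ignored.
mob_limit_kb() {
    sed -n 's/.*increasing guest mob limits to \([0-9][0-9]*\) kB.*/\1/p'
}
```

For example, `mob_limit_kb < /mnt/var/log/kern.log` prints one number per matching line. For the log line quoted above, that number is 24576, which is 24 MB.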

Fix #1 – Boot Older Kernel (hard)

The first thing I wanted to try was booting an earlier kernel version. I was having a lot of trouble getting my keystrokes to register during a reboot of the VM, so while in the Live CD, I adjusted the GRUB settings to give me more time to choose boot options. I bind-mounted the following directories into the mounted filesystem:

sudo mount --bind /dev /mnt/dev

sudo mount --bind /sys /mnt/sys

sudo mount --bind /proc /mnt/proc

Then I used chroot to change the environment so that commands would run as if they were on the installed OS:

sudo chroot /mnt

I edited the following lines in /etc/default/grub to change the boot options:

GRUB_TIMEOUT_STYLE=menu

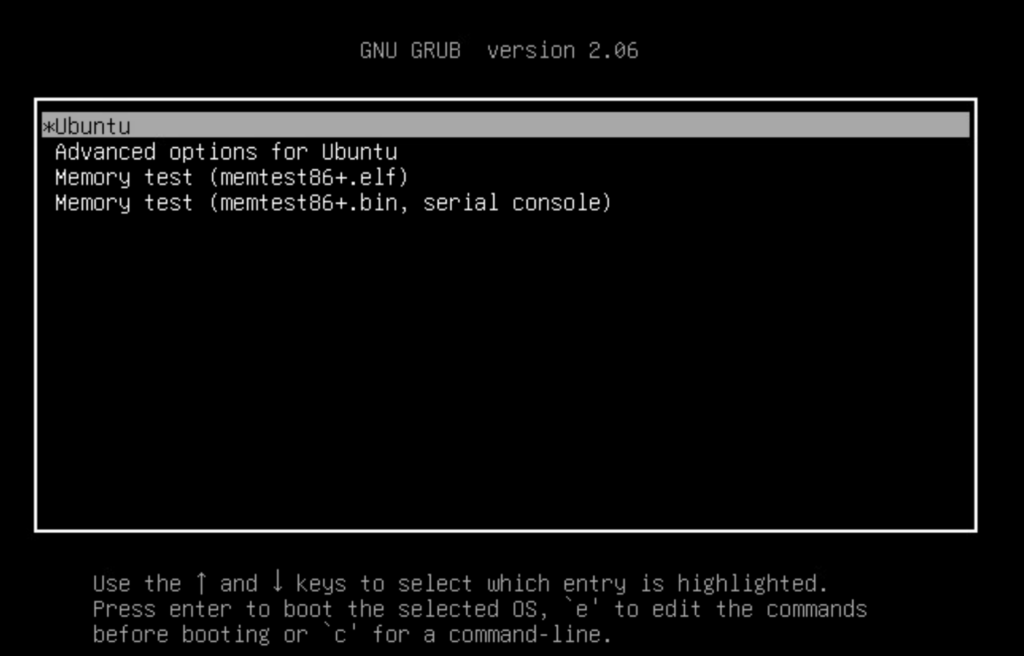

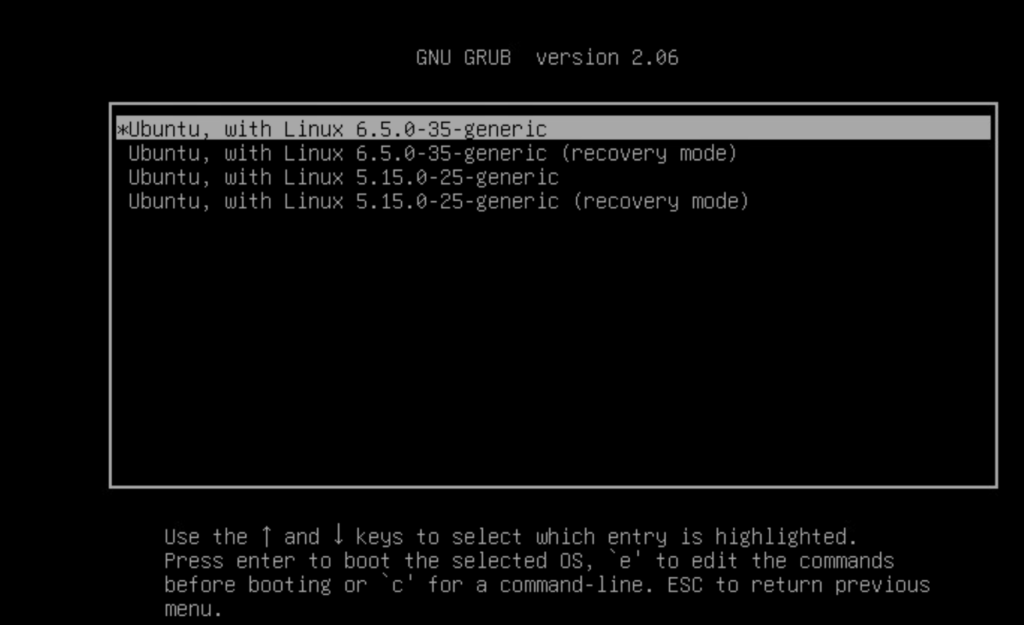

GRUB_TIMEOUT=-1This would ensure that the GRUB boot menu would always come up, and I wouldn’t have to try to switch screens and focus my window in time to hold the SHIFT key to get the menu to come up (it turned out to just be a timing issue). Once I made the change, I ran sudo update-grub to make the change take effect, then rebooted the VM. The VM booted and paused right on the GRUB menu, and under “Advanced options for Ubuntu”, I was able to choose an earlier kernel version, and boot into the graphical environment successfully.
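I made the two GRUB edits by hand, but they could also be scripted. The following is a minimal sketch, not what I actually ran: the function name `set_grub_option` is hypothetical, and it assumes simple values that contain no `|` or `&` characters.

```shell
# Hypothetical helper: set KEY=VALUE in a GRUB defaults file.
# Replaces an existing (possibly commented-out) KEY= line, or appends one
# if the key is not present. Assumes VALUE contains no '|' or '&'.
set_grub_option() {
    key="$1"
    value="$2"
    file="${3:-/etc/default/grub}"
    if grep -q -E "^#?[[:space:]]*${key}=" "$file"; then
        tmp_out="$(mktemp)"
        # Rewrite matching lines into a temp file, then copy the result
        # back so the original file keeps its owner and permissions.
        sed -E "s|^#?[[:space:]]*${key}=.*|${key}=${value}|" "$file" > "$tmp_out" \
            && cat "$tmp_out" > "$file"
        rm -f "$tmp_out"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

Inside the chroot, `set_grub_option GRUB_TIMEOUT_STYLE menu` followed by `set_grub_option GRUB_TIMEOUT -1` would apply the same two settings, after which update-grub still needs to be run for them to take effect.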

Reproducing The Issue

After successfully fixing the existing VM, I wanted to see if this was something specific to this VM, or something I could reproduce. I installed a brand new Ubuntu 22.04 Desktop (Jammy Jellyfish) from the Live CD, and it booted up fine. I ran the following commands to update to the latest available packages:

sudo apt update && sudo apt full-upgrade

After a reboot, I ran into the exact same problem as the other VM. So it wasn’t just a one-off.

Fix #2 – Increase Video Memory (easy)

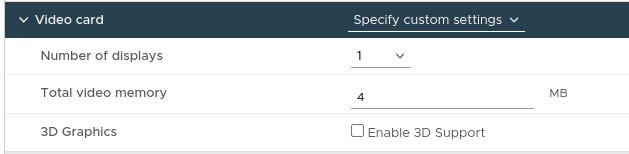

Now the original kernel error had indicated “Consider increasing guest RAM and graphicsMemory.” The VM I built had 8GB of RAM, and 6.5GB free, so that wasn’t the issue. So I tried increasing the video memory. In the vSphere VM settings, I took a look at the video card settings, which weren’t changed during the setup, and they defaulted to 4MB:

I shut the VM down, changed this to 8MB, then started it back up. The graphical interface started up properly without issue, and I confirmed I was running on the 6.5.0-35-generic kernel.
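The kernel check at the end can be done with uname -r. As a small sketch for scripting that check, the hypothetical helper `kernel_in_series` below tests whether a release string belongs to a given major.minor series.

```shell
# Hypothetical helper: succeed if a kernel release string (as printed by
# `uname -r`) belongs to the given major.minor series, e.g. "6.5".
kernel_in_series() {
    release="$1"
    series="$2"
    case "$release" in
        # The quoted series is matched literally, followed by a dot.
        "$series".*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `kernel_in_series "$(uname -r)" 6.5 && echo "running a 6.5 kernel"` prints the message only when the running kernel is from the 6.5 series.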

Conclusion

While this post didn’t dig into all of the details on why this issue happened, I found it helpful to outline the troubleshooting process, and touch on some of the commands and resources used. Coincidentally, one of the upcoming classes will focus on the Linux boot process, so this will be a good example to review.

I’ve included some of the resources I read through below. I did find a couple of generic VMware KB articles that indicated a problem between vmwgfx and Wayland, but no real error messages to pin down the cause.

Resources

- drm/vmwgfx: Be a lot more flexible with MOB limits

- vmwgfx failure with Ubuntu kernel upgrade (6.5.0-14)

- Kernel.org Bugzilla – Main Page

- Ubuntu Forums – How do I run update-grub from a LiveCD?

- VMware KB-334539 – Higher screen resolution causes freeze or black screen for Ubuntu 17.10 Wayland 2D VM with default (4MB) video memory
